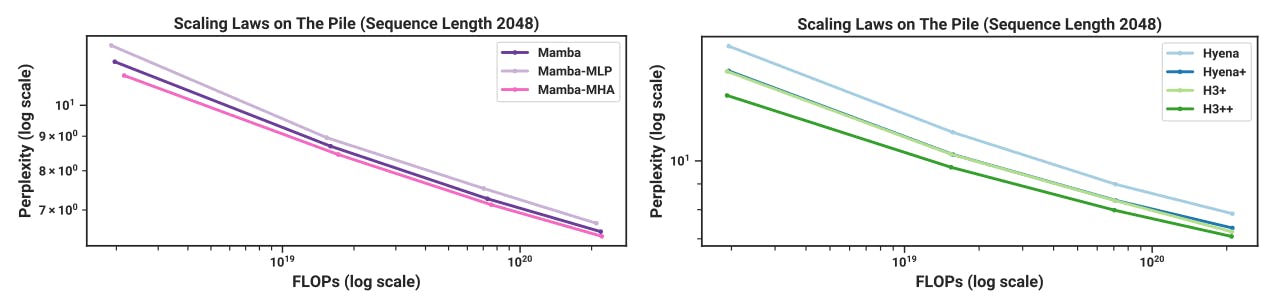

How Mamba and Hyena Are Changing the Way AI Learns and Remembers

18 Dec 2024

Explore detailed experimental results on synthetic tasks such as Selective Copying and Induction Heads, and on models like Mamba and Hyena.

Hardware-Aware Algorithm for Selective State Space Models

18 Dec 2024

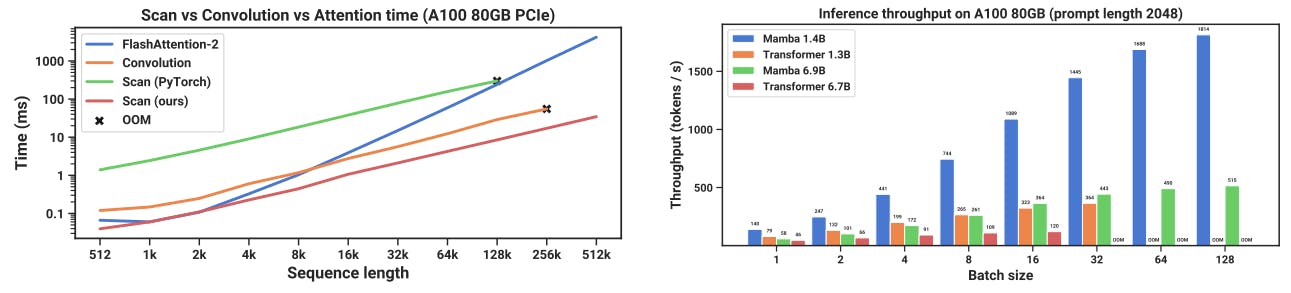

Selective SSMs achieve up to 7× faster processing than attention and optimal memory efficiency with kernel fusion and recomputation on modern GPUs.

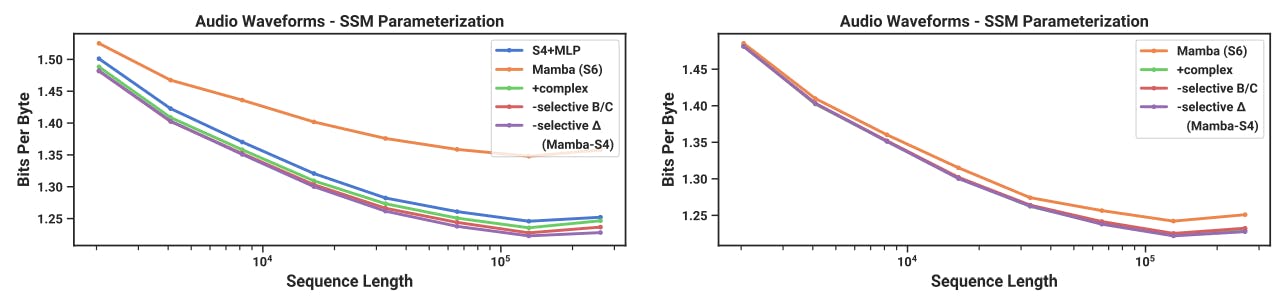

The Math Behind Selective State Space Models

18 Dec 2024

Explore the mechanics of Selective SSMs, focusing on discretization, learnable biases, and zero-order hold formulas for efficient AI modeling.

AI That Remembers

18 Dec 2024

Discover how new AI models handle longer tasks, improve learning, and bring smarter, faster performance to the next generation of technology.

Why Selection Mechanisms Are Key to the Future of Sequence Modeling

18 Dec 2024

Explore the concept of selection mechanisms in neural networks, distinguishing them from traditional gating, hypernetworks, and data-dependence.

Why Mamba Could Be the Future of Big Data Processing

18 Dec 2024

Mamba introduces a selection mechanism to structured state space models, enabling context-dependent reasoning with linear scaling in sequence length.

Why Scaling Mamba Beyond Small Models Could Lead to New Challenges

17 Dec 2024

Mamba introduces transformative concepts in language modeling with its selective SSMs, but the scaling of these models faces engineering challenges.

How Selective State Space Models Boost Mamba’s Performance

17 Dec 2024

Mamba’s architecture is enhanced by the selective SSM layer, yielding significant performance improvements.

How Mamba’s Design Makes AI Up to 40x Faster

17 Dec 2024

Mamba's selective scan is faster than FlashAttention-2 on long sequences and up to 20-40× faster than a standard scan implementation, and Mamba offers higher inference throughput and better memory efficiency than comparable Transformers.